The Immersive Content Gap

Since the Apple Vision Pro launched, we’ve seen impressive demos of immersive spatial videos and interactive stories designed specifically for visionOS. Apple even hosted an event titled “Create interactive stories for visionOS” highlighting interaction design, storytelling techniques, and performance optimization for these new experiences.

While these purpose-built immersive experiences are impressive, they represent just a fraction of the content we consume. The reality is that most media companies aren’t going to rebuild their entire catalogs for spatial computing. The target audience remains relatively small, and the production costs are substantial.

But what about the countless regular movies and TV shows we already love? Is there a way to enhance these conventional experiences without changing the existing production process?

Metadata: The Overlooked Dimension of Immersion

I believe there is—through the intelligent use of metadata.

DVDs gave us deleted scenes, commentary tracks, and other “extras” that enhanced our viewing experience. Xbox SmartGlass promised a supplemental second-screen experience. Today, Amazon Prime Video has X-Ray and Apple TV+ has InSight, both providing additional information layers over currently playing content.

However, these features are often limited to specific content libraries where the metadata has been manually curated. What if we could extend this capability to any video content using AI?

A Vision for Video Metadata on Apple Vision Pro

I’ve created a concept video demonstration showcasing how Apple Intelligence (coming to Vision Pro in April) could transform ordinary video viewing into something more interactive and immersive. Here’s how it works:

The Basic Information Layer

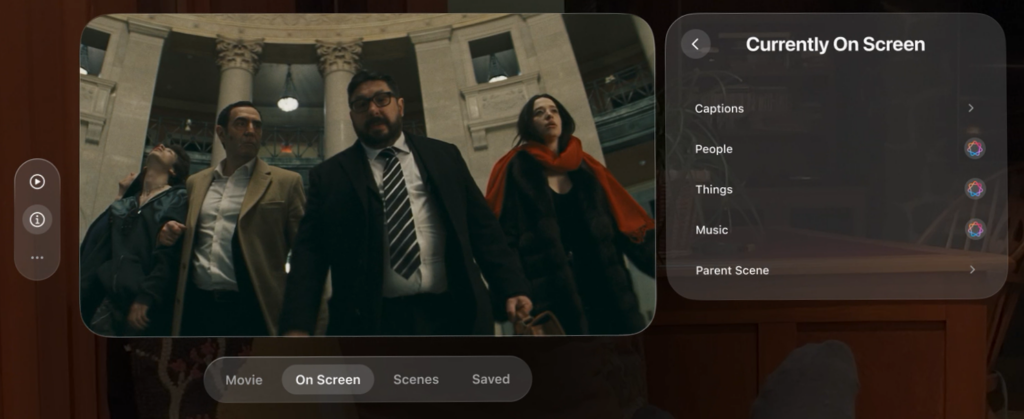

When watching a movie, a simple tap brings up the “Currently On Screen” menu, offering access to:

- Saved Items: A collection of moments you’ve bookmarked for later exploration.

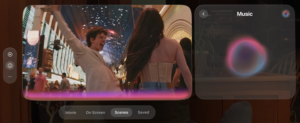

- Scenes/Chapters: A visual grid of frames representing different scenes or chapters, with hover-to-preview functionality. This visual navigation makes finding specific moments intuitive. Chapter data is often already present; where it is missing, AI scene/cut detection is well-established technology.

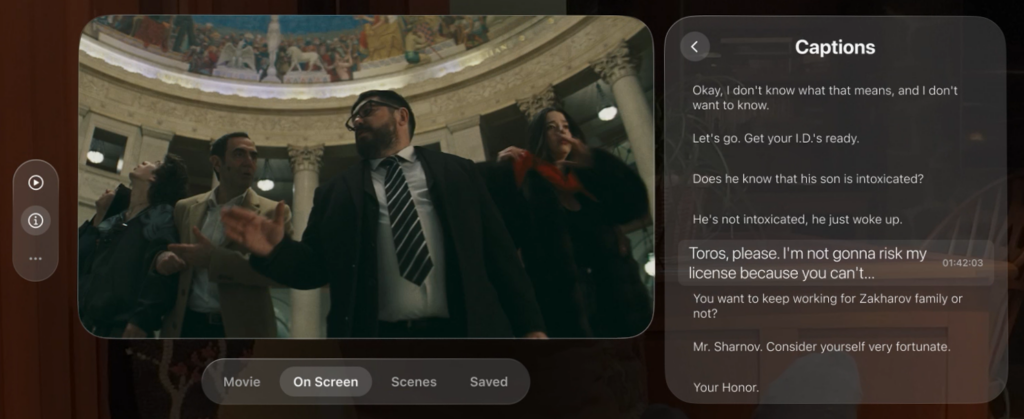

- Captions: Navigate through dialogue, with each line linked to its corresponding timestamp in the video. This makes finding favorite quotes or key plot points effortless. Caption data is already available for commercial videos.
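To make the caption idea concrete, here is a minimal Python sketch of linking dialogue to timestamps. It assumes the captions are available as SubRip-style (SRT) text, which is only one of several caption formats a real player might receive; the names `Cue`, `parse_srt`, and `find_quote` are hypothetical and exist purely for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Cue:
    start: float  # seconds from the start of the video
    end: float
    text: str


# Matches timestamps such as 00:01:12,250 (SRT) or 00:01:12.250 (WebVTT-style).
_TIME = re.compile(r"(\d+):(\d{2}):(\d{2})[,.](\d{3})")


def _to_seconds(stamp: str) -> float:
    match = _TIME.fullmatch(stamp.strip())
    if match is None:
        raise ValueError(f"bad timestamp: {stamp!r}")
    h, m, s, ms = (int(part) for part in match.groups())
    return h * 3600 + m * 60 + s + ms / 1000


def parse_srt(srt_text: str) -> list[Cue]:
    """Split SRT text into cues; blocks without a timing line are skipped."""
    cues = []
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = [line for line in block.splitlines() if line.strip()]
        timing = next((i for i, line in enumerate(lines) if "-->" in line), None)
        if timing is None:
            continue
        start, end = lines[timing].split("-->")
        # Multi-line captions are joined so a quote can span a line break.
        text = " ".join(lines[timing + 1:])
        cues.append(Cue(_to_seconds(start), _to_seconds(end), text))
    return cues


def find_quote(cues: list[Cue], query: str) -> list[Cue]:
    """Return every cue whose text contains the query, ignoring case."""
    needle = query.casefold()
    return [cue for cue in cues if needle in cue.text.casefold()]


SAMPLE_SRT = """\
1
00:00:05,000 --> 00:00:07,500
Where are we going?

2
00:01:12,250 --> 00:01:15,000
Somewhere nobody
will find us.
"""

cues = parse_srt(SAMPLE_SRT)
hits = find_quote(cues, "nobody will find")
```

Each hit carries a `start` time in seconds, which is the value a player would seek to when the viewer selects that line in the caption list.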

The AI-Enhanced Layer

For content without pre-curated metadata, Apple Intelligence can help bridge the gap.

- People Identification: Using the actor list from sources like IMDb, facial recognition can identify who’s on screen at any given moment. Because the cast is already known, identification reduces to choosing among that limited set of faces. Select an actor to view their filmography or character information.

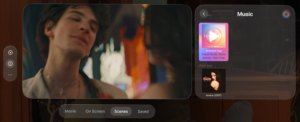

- Music Detection: Similar to Shazam, the system can identify songs playing in the background and link to Apple Music or other music streaming services. This is a straightforward affiliate opportunity.

- Product/Outfit Recognition: Identify clothing items or products seen on screen, with the potential to offer similar items at various price points—turning any movie into a shoppable experience.

All AI-generated information is clearly marked with the rainbow coloring associated with Apple Intelligence, maintaining transparency about what’s curated versus what’s algorithmically determined.
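The people-identification step above relies on the cast being known in advance, which turns open-ended face recognition into a small closed-set matching problem. The Python sketch below illustrates that idea only. It assumes some face-recognition model has already turned each face into a numeric embedding; the three-element vectors, the `identify_face` helper, and the `0.8` threshold are invented placeholders rather than values from any real system.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify_face(face_embedding, cast_embeddings, min_similarity=0.8):
    """Pick the closest cast member, or None if nobody is close enough.

    Only the known cast is searched, so the candidate set stays small.
    The similarity score is returned either way so a UI could show it.
    """
    best_name, best_score = None, -1.0
    for name, reference in cast_embeddings.items():
        score = cosine_similarity(face_embedding, reference)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < min_similarity:
        return None, best_score
    return best_name, best_score


# Placeholder reference embeddings for a hypothetical three-person cast.
CAST = {
    "Actor A": [0.9, 0.1, 0.0],
    "Actor B": [0.1, 0.9, 0.1],
    "Actor C": [0.0, 0.2, 0.9],
}

name, score = identify_face([0.85, 0.15, 0.05], CAST)
unknown, low_score = identify_face([0.5, 0.5, 0.5], CAST)
```

The first query lands close to "Actor A" and is accepted; the second is roughly equidistant from everyone, so it falls below the threshold and is left unlabeled rather than guessed. An extra or uncredited performer should produce the same unlabeled result.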

True Spatial Interaction

What makes this truly compelling on Vision Pro is the spatial interaction:

- Long-press on a character to “pull” them into a separate immersive window

- Scrub through frames featuring that character

- Ask Siri natural questions about what you’re seeing: “What jacket is that?” or “Where is this scene filmed?”

Why This Matters

There are several compelling reasons why this approach makes sense:

- Practical Implementation: The foundation technologies already exist—facial recognition, music identification, and object detection. We’re not reinventing the wheel, just applying existing capabilities in a new context.

- No Disruption to Viewing: Unlike second-screen experiences, this approach keeps everything in one device and one field of view. And with Vision Pro being a personal device, exploring metadata doesn’t disrupt others’ viewing experiences.

- Content Longevity: This approach gives new life to existing content libraries without requiring expensive production overhauls. It’s an additive layer, not a replacement.

- Personalization: The ability to bookmark and explore what interests you creates a more personalized viewing experience.

Technical and Legal Considerations

Of course, there are considerations:

- DRM and Rights Management: Accessing and manipulating protected video content presents technical and legal challenges.

- Contracts and Endorsements: Actor contracts may need review wherever product links could be perceived as an endorsement. Fortunately, the UI paradigm of spawning new windows for additional functionality provides a visual and logical separation from the actors on screen.

- AI Accuracy: The system would need to be transparent about the confidence level of its identifications and provide clear indicators when information is AI-generated.

- Privacy: Facial recognition and other identification technologies raise privacy questions that would need careful handling.

- VR: None of this strictly requires a fully immersive VR experience.
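The AI accuracy point in the list above suggests that every piece of metadata should carry its provenance and a confidence value. One possible shape for such a record is sketched below in Python. All names here (`Source`, `MetadataItem`, `items_on_screen`, `display_label`) and the `0.6` cutoff are assumptions made for illustration, not part of any Apple API.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    CURATED = "curated"  # supplied by the studio or a human editor
    AI = "ai"            # inferred by a model


@dataclass(frozen=True)
class MetadataItem:
    label: str
    kind: str            # e.g. "person", "song", "product"
    start: float         # seconds; item is on screen for start <= t < end
    end: float
    source: Source
    confidence: float = 1.0


def items_on_screen(items, time, min_confidence=0.6):
    """Items active at `time`, hiding low-confidence AI guesses.

    Curated items are always kept and sorted first; AI items follow,
    most confident first.
    """
    visible = [
        item for item in items
        if item.start <= time < item.end
        and (item.source is Source.CURATED or item.confidence >= min_confidence)
    ]
    return sorted(visible, key=lambda item: (item.source is Source.AI, -item.confidence))


def display_label(item):
    """Mark AI-generated labels and expose their confidence to the viewer."""
    if item.source is Source.AI:
        return f"{item.label} (AI, {item.confidence:.0%} confident)"
    return item.label


items = [
    MetadataItem("Lead Actor", "person", 0, 120, Source.CURATED),
    MetadataItem("Background Song", "song", 30, 90, Source.AI, 0.92),
    MetadataItem("Leather Jacket", "product", 30, 90, Source.AI, 0.41),
]

labels = [display_label(item) for item in items_on_screen(items, 45.0)]
```

At the 45-second mark this yields the curated actor entry followed by the song tagged as AI-generated at 92% confidence, while the low-confidence jacket guess is withheld. Keeping the source on the record itself means the interface can style curated and AI-generated entries differently without extra lookups.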

Beyond Movies: The Bigger Picture

While I’ve focused on movies, this approach could extend to other content:

- Educational Videos: Identify concepts, link to additional resources, or generate study materials

- Sports: Identify players, pull up statistics, or analyze plays

- Travel Shows: Map locations, provide additional cultural context, or link to travel planning resources

The Future of Media Consumption

The future of media isn’t just about creating new immersive content—it’s also about enhancing how we interact with the content we already have. By layering intelligent metadata over conventional video, we can create more engaging, informative, and personalized experiences without requiring massive changes to existing content libraries.

This approach represents a practical middle ground between traditional passive viewing and fully immersive spatial experiences. It acknowledges the reality that most content will remain in traditional formats for the foreseeable future, while still leveraging the unique capabilities of devices like Apple Vision Pro.

Having worked with media companies on metadata experiences in the past, I’m excited about the potential here. What we need isn’t just more immersive content—we need smarter ways to immerse ourselves in all content.

This concept demonstration was created to illustrate possibilities for the Apple Vision Pro. The technologies discussed exist today but would require integration and implementation within Apple’s ecosystem.